THE CHALLENGE:

When we started building the Alis chatbot, we were excited by the idea of a conversational assistant that could hold meaningful, human-like interactions over time. During the first phase of development, however, we hit a major obstacle: the chatbot could not maintain context in long conversations. After several back-and-forth exchanges, it began to lose track of what had been said earlier. Our first attempt relied on prompt tuning alone: adjusting instructions, refining prompts, and optimising how context was fed into the model. None of these changes produced satisfactory results, and the bot’s performance continued to degrade as conversations grew longer.

THE REALISATION:

This was when we realised that context handling was not just a matter of prompt design; it was an architectural problem. The breakthrough came when we began treating memory as a structured, layered system rather than a flat history of messages.

THE REWIRING:

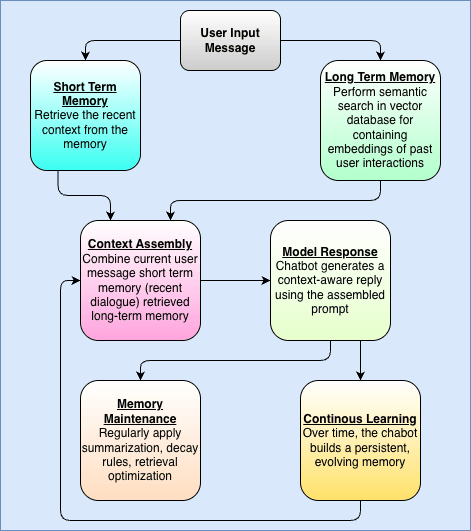

We split memory into two layers and handled each one differently. The first layer, short-term memory, held the recent messages of the ongoing conversation. This let the chatbot respond naturally to immediate questions, much like a person remembering what was said a few sentences earlier. The second layer, long-term memory, was stored externally in a vector database. It enabled the chatbot to recall information from previous sessions, such as user preferences, recurring topics, or earlier decisions.

For example, if a user had earlier told Alis that they preferred weekly summaries rather than daily notifications, the chatbot could recall this preference in future conversations without being reminded. This continuity made interactions feel far more personalised.
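A minimal sketch of the two layers, assuming Python 3.9+: a fixed-size buffer holds the current session's recent turns, while a separate per-user store persists facts across sessions. The class names, the `max_turns` window size, and the user ID are hypothetical, and a plain dictionary stands in for the external vector database, so this illustrates the split between layers rather than how Alis itself was built.

```python
from collections import deque


class ShortTermMemory:
    """Recent turns of the current session only (hypothetical helper)."""

    def __init__(self, max_turns: int = 6):
        # A deque with maxlen drops the oldest turn automatically once full
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def as_messages(self) -> list[tuple[str, str]]:
        return list(self.turns)


class LongTermMemory:
    """Per-user facts that outlive a session.

    Assumption: a dict of lists stands in for the external vector database.
    """

    def __init__(self):
        self.facts: dict[str, list[str]] = {}

    def remember(self, user_id: str, fact: str) -> None:
        self.facts.setdefault(user_id, []).append(fact)

    def recall(self, user_id: str) -> list[str]:
        return list(self.facts.get(user_id, []))


long_term = LongTermMemory()

# Session 1: the user states a preference, which is also written to long-term memory
session1 = ShortTermMemory(max_turns=4)
session1.add("user", "I'd prefer weekly summaries rather than daily notifications.")
long_term.remember("user-42", "prefers weekly summaries over daily notifications")

# Session 2: a fresh short-term buffer, but the preference is still recallable
session2 = ShortTermMemory(max_turns=4)
session2.add("user", "What's new this week?")
print(session2.as_messages())
print(long_term.recall("user-42"))
```

Because the short-term buffer is recreated for each session, the weekly-summaries preference survives only because it was also written to the long-term layer.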

THE PROCESS FLOW:

Retrieval from long-term memory worked through semantic search. Every time a user sent a message, the system embedded the new input and searched the vector database for the most relevant memories based on meaning, not just keywords. The retrieved memories were then added to the model’s prompt before it generated a response. This retrieval-augmented setup gave the chatbot continuity of context without overloading the model’s limited context window.
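The retrieval loop can be sketched as follows. This is an illustrative sketch, not the Alis code: `embed` is a placeholder that returns a sparse bag-of-words vector so the example runs without dependencies. A real deployment would swap in a sentence-embedding model, since that model is what makes the match semantic rather than lexical. `VectorStore`, `build_prompt`, `k`, and `min_score` are likewise hypothetical names and values.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Placeholder 'embedding': a sparse bag-of-words vector.

    Assumption: production code would call a sentence-embedding model here;
    that model is what makes matching semantic rather than keyword-based.
    """
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """In-memory list standing in for an external vector database."""

    def __init__(self):
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Embed once at write time so searches only embed the query
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 3, min_score: float = 0.2) -> list[str]:
        q = embed(query)
        scored = [(cosine(q, vec), text) for text, vec in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        # Take the top-k, then drop weak matches so they don't crowd the prompt
        return [text for score, text in scored[:k] if score >= min_score]


def build_prompt(query: str, memories: list[str]) -> str:
    memory_block = "\n".join(f"- {m}" for m in memories) or "- (none)"
    return f"Relevant memories:\n{memory_block}\n\nUser: {query}"


store = VectorStore()
store.add("User prefers weekly summaries over daily notifications")
store.add("User is planning a vacation next month")
store.add("User works on the billing dashboard project")

query = "Send me the weekly summaries"
print(build_prompt(query, store.search(query, k=2)))
```

The `min_score` cut-off is one way to keep weakly related memories from consuming context-window space; with these three memories, only the summaries preference clears it.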

THE EXTRA MILE:

We also added an important post-conversation step. After each exchange, the chatbot summarised or extracted key information and stored it back into the memory database. For instance, if a user mentioned that they were planning a vacation next month, Alis would store that fact. Weeks later, when the user returned, Alis could naturally ask, "How was your vacation?" This gave the chatbot a sense of long-term awareness and emotional connection.
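The post-conversation step might look roughly like this. In practice the extraction would more likely be done by prompting a language model to summarise the exchange; the regular expressions in `EXTRACTORS` are a hypothetical stand-in so the sketch is self-contained, and `Memory`, `post_conversation`, `follow_up_questions`, and the 14-day gap are all illustrative assumptions.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Memory:
    user_id: str
    kind: str          # "plan" or "preference" in this sketch
    detail: str
    created_at: datetime


# Hypothetical rules standing in for an LLM prompt that extracts key facts
EXTRACTORS = [
    ("plan", re.compile(r"\bI(?:'m| am) planning (?:a |an )?(\w+)", re.IGNORECASE)),
    ("preference", re.compile(r"\bI prefer ([\w ]+?)(?=[.!?]|$)", re.IGNORECASE)),
]


def post_conversation(user_id: str, transcript: list[str],
                      store: list[Memory], now: datetime) -> None:
    """Scan the finished exchange and write extracted facts back to memory."""
    for utterance in transcript:
        for kind, pattern in EXTRACTORS:
            match = pattern.search(utterance)
            if match:
                store.append(Memory(user_id, kind, match.group(1).strip(), now))


def follow_up_questions(user_id: str, store: list[Memory], now: datetime,
                        min_gap: timedelta = timedelta(days=14)) -> list[str]:
    """On a later visit, turn sufficiently old plans into natural check-ins."""
    return [
        f"How was your {m.detail}?"
        for m in store
        if m.user_id == user_id and m.kind == "plan" and now - m.created_at >= min_gap
    ]


memories: list[Memory] = []
start = datetime(2024, 5, 1)
post_conversation("user-42",
                  ["I'm planning a vacation next month.", "I prefer weekly summaries."],
                  memories, now=start)

print(follow_up_questions("user-42", memories, now=start + timedelta(days=3)))
print(follow_up_questions("user-42", memories, now=start + timedelta(weeks=5)))
```

Storing a timestamp with each extracted fact is what lets the follow-up wait until the vacation has plausibly happened instead of asking about it the next day.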

THE RESULT:

Over time, this system evolved into a dynamic, self-improving memory. We also learned the importance of deciding what to remember and what to forget. Not every detail deserves to be stored forever, so some memories are allowed to fade, a process we call "memory decay." For example, when a user’s temporary request becomes outdated, the system automatically deprioritises it. This keeps the memory base lean, relevant, and fast to search.
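One common way to implement this kind of decay is an exponential half-life on each memory's weight, measured from when it was last used. The sketch below assumes that approach, which is not necessarily the one Alis used; the memory kinds, half-life values, pruning threshold, and the `retrieval_score` combination are illustrative assumptions, chosen so that durable preferences fade slowly while temporary requests fade within days.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumed half-lives per memory kind; the values are illustrative, not tuned
HALF_LIFE = {
    "preference": timedelta(days=180),
    "temporary_request": timedelta(days=3),
}


@dataclass
class Memory:
    text: str
    kind: str
    last_used: datetime
    importance: float = 1.0


def decayed_weight(m: Memory, now: datetime) -> float:
    """importance * 0.5 ** (age / half_life): halves every half-life since last use."""
    age = now - m.last_used
    return m.importance * 0.5 ** (age / HALF_LIFE[m.kind])  # timedelta / timedelta -> float


def retrieval_score(similarity: float, m: Memory, now: datetime) -> float:
    # One possible combination: semantic relevance scaled by recency weight
    return similarity * decayed_weight(m, now)


def rank(memories: list[Memory], now: datetime) -> list[Memory]:
    return sorted(memories, key=lambda m: decayed_weight(m, now), reverse=True)


def prune(memories: list[Memory], now: datetime, threshold: float = 0.05) -> list[Memory]:
    # Drop memories whose weight has decayed below the (assumed) threshold
    return [m for m in memories if decayed_weight(m, now) >= threshold]


t0 = datetime(2024, 5, 1)
memories = [
    Memory("Prefers weekly summaries over daily notifications", "preference", t0),
    Memory("Remind me about the invoice tomorrow", "temporary_request", t0),
]
now = t0 + timedelta(days=30)
for m in rank(memories, now):
    print(f"{decayed_weight(m, now):.3f}  {m.text}")
kept = prune(memories, now)
```

After 30 days the preference still carries roughly 0.89 of its weight, while the temporary request has fallen below 0.001 and is pruned. Scaling the semantic-search similarity by the decayed weight, as `retrieval_score` does, is one way to deprioritise stale memories without deleting them outright.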

THE LESSON:

In the end, our biggest lesson was that chatbot memory is not about how much data you can store or how large your context window is. It is about designing smart processes for retrieval, summarisation, and decay. A well-designed memory system makes a chatbot more adaptive, personal, and contextually aware, allowing it to grow with each user interaction.

FINALLY:

At Cubet, structured processes like these help us uncover root causes quickly and implement scalable solutions. The Alis experience taught us that thoughtful memory design can turn a basic conversational model into a truly engaging digital companion: one that not only talks but also remembers, learns, and evolves with every conversation.

Want to build AI chatbots that go beyond conversation and actually remember?

Let’s talk about how Cubet can help you design intelligent, memory-driven conversational systems made for real-world impact.

