AI conversations today are full of new terms, but few cause as much confusion as AI agents and Agentic AI. They sound similar, they’re often used interchangeably, and on the surface, they seem to point to the same idea: autonomous AI systems.

But they’re not the same. And if you’re building real systems, especially in healthcare, enterprise operations, or customer-facing platforms, this distinction isn’t theoretical. It shows up quickly in what your system can and cannot handle.

Let’s break this down in a way that actually makes sense.

AI Agents: Good at Tasks, Limited by Design

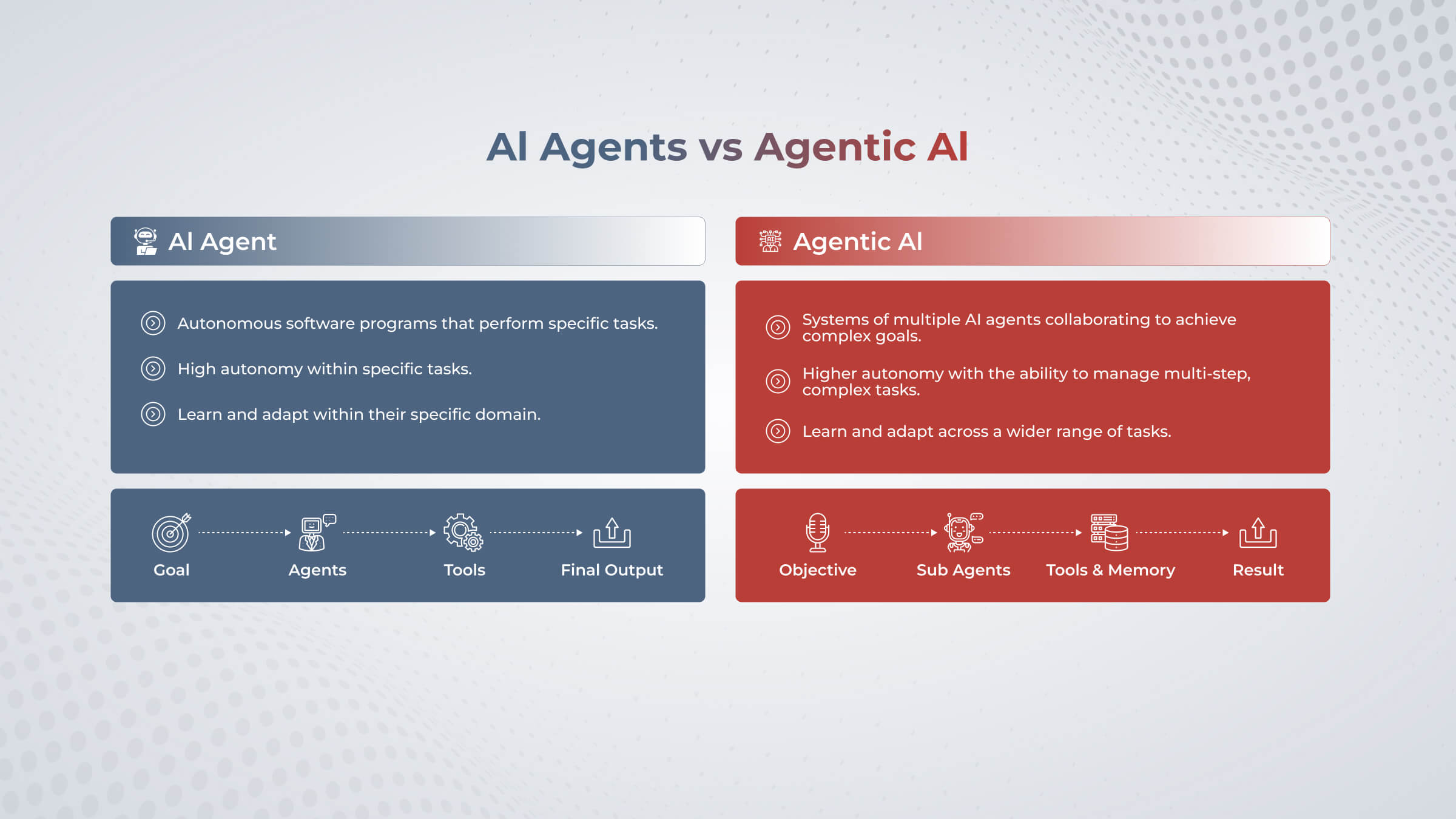

Most organisations are already using AI agents today, even if they don’t label them that way. An AI agent is a system designed to perform a specific task on its own. You give it a job, define its boundaries, connect it to the right data or tools, and let it run.

Think chatbots answering questions, voice bots making reminder calls, assistants scheduling meetings, or automation validating data. These systems are autonomous, but only within a clearly defined scope.

That’s exactly why they work well.

They’re predictable.

They’re efficient.

They do what they’re told.

But they don’t step back and ask whether the task still makes sense in the bigger picture. They don’t reassess priorities or change direction unless you explicitly tell them to. In simple terms, AI agents execute actions. They don’t own outcomes.
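To make "executes actions, doesn't own outcomes" concrete, here is a minimal sketch of a task-level agent. Everything in it is an assumption made for illustration: the `ReminderCallAgent` name, the fixed question list, and the `answer_fn` callback that stands in for a real voice or chat channel.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical fixed script: the agent never deviates from it.
FIXED_QUESTIONS = [
    "Are you taking your medication as prescribed?",
    "Are you in any pain?",
    "Do you have a follow-up appointment booked?",
]


@dataclass
class ReminderCallAgent:
    """A task-level agent: asks a fixed script and records the answers."""

    questions: List[str] = field(default_factory=lambda: list(FIXED_QUESTIONS))

    def run(self, answer_fn: Callable[[str], str]) -> Dict[str, str]:
        # answer_fn stands in for the real channel (voice, chat, SMS).
        transcript = {}
        for question in self.questions:
            transcript[question] = answer_fn(question)
        # The agent returns what it collected; it does not judge the answers
        # or decide what should happen next.
        return transcript


agent = ReminderCallAgent()
transcript = agent.run(lambda question: "yes")
```

Notice that the agent records "yes" to the pain question and simply moves on. Deciding what that answer means is outside its scope.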

Where AI Agents Start to Struggle

Problems begin when teams expect AI agents to behave like full systems.

Real-world workflows aren’t neat. They change based on timing, context, and human behaviour. Patients respond differently. Customers don’t follow scripts. Exceptions are the norm, not the edge case.

What usually happens next is that teams try to “make the agent smarter.” They add more rules, more conditions, and more exceptions. Over time, the logic becomes fragile and hard to manage.

At that point, the issue isn’t intelligence. It’s that the problem has quietly outgrown a task-based approach.

Agentic AI: Shifting from Tasks to Outcomes

Agentic AI approaches the problem from a different angle. Instead of focusing on what action to perform, it focuses on what outcome needs to be achieved.

An agentic AI system doesn’t rely on a single agent. It coordinates multiple agents, tools, and decisions to move toward a goal. It can decide what needs to happen next, adjust based on feedback, and escalate when required.

This is the key shift:

AI agents follow instructions.

Agentic AI manages intent.

It’s less about automation and more about orchestration.
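One way to picture orchestration is a loop that keeps checking a goal, picks the next agent based on the current state, and escalates if it cannot get there. The sketch below is an illustration under stated assumptions, not a reference design: `orchestrate`, `choose_next`, `goal_met`, the agent names, and the step limit are all hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, bool]


def orchestrate(
    state: State,
    agents: Dict[str, Callable[[State], State]],
    choose_next: Callable[[State], Optional[str]],
    goal_met: Callable[[State], bool],
    max_steps: int = 5,
) -> Tuple[str, List[str]]:
    """Run agents until the goal is met; escalate if that never happens."""
    history: List[str] = []
    for _ in range(max_steps):
        if goal_met(state):
            return "done", history
        agent_name = choose_next(state)  # decide what needs to happen next
        if agent_name is None:           # no agent can make progress
            break
        state = agents[agent_name](state)  # act, then re-evaluate on feedback
        history.append(agent_name)
    # Out of steps or options: finish if the goal was reached, else hand off.
    return ("done" if goal_met(state) else "escalate"), history


# Hypothetical agents: each one does a single task and updates shared state.
agents = {
    "call_patient": lambda s: {**s, "contacted": True},
    "assess_answers": lambda s: {**s, "assessed": True},
}


def choose_next(s: State) -> Optional[str]:
    if not s["contacted"]:
        return "call_patient"
    if not s["assessed"]:
        return "assess_answers"
    return None


outcome, history = orchestrate(
    {"contacted": False, "assessed": False},
    agents,
    choose_next,
    goal_met=lambda s: s["assessed"],
)
```

The individual agents stay simple. The goal check, the choice of next step, and the escalation path live in the coordinating loop, which is the part a single task agent does not have.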

A Real-World Example Makes This Clear

Take post-discharge patient follow-ups. A simple AI agent can place a call, ask a fixed set of questions, record responses, and end the interaction. That’s useful, but limited.

An agentic AI system looks at the broader objective: ensuring patient recovery and identifying risks early.

It decides when to call based on discharge data, adapts questions based on the patient’s condition, responds differently if the patient sounds confused or uncomfortable, and escalates to a care team only when needed. It may even schedule another follow-up automatically. No single agent does all of this. The system coordinates actions across agents and steps.

That’s agentic AI in practice.
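The decisions in this example (when to call, what to ask, whether to escalate) can be sketched as three small policies. This is an illustrative sketch only: the risk tiers, delay values, condition names, and questions are invented placeholders, not clinical guidance and not a description of any real product.

```python
from datetime import date, timedelta
from typing import Dict, List

# Hypothetical policy values, chosen only for illustration.
CALL_DELAY_DAYS = {"high": 1, "medium": 3, "low": 7}
BASE_QUESTIONS = ["Are you taking your medication as prescribed?"]
CONDITION_QUESTIONS = {
    "cardiac": ["Any chest pain or shortness of breath?"],
    "surgery": ["Is the wound red, swollen, or leaking?"],
}


def next_call_date(discharged_on: date, risk: str) -> date:
    """Decide when to call based on discharge data."""
    return discharged_on + timedelta(days=CALL_DELAY_DAYS[risk])


def questions_for(condition: str) -> List[str]:
    """Adapt the questions to the patient's condition."""
    return BASE_QUESTIONS + CONDITION_QUESTIONS.get(condition, [])


def decide_follow_up(concerns: Dict[str, bool], sounded_confused: bool) -> str:
    """concerns maps each question to True if the reply raised a concern."""
    if sounded_confused or any(concerns.values()):
        return "escalate_to_care_team"
    return "schedule_next_follow_up"


call_on = next_call_date(date(2024, 3, 1), "high")
questions = questions_for("cardiac")
routine = decide_follow_up({q: False for q in questions}, sounded_confused=False)
urgent = decide_follow_up({questions[0]: False, questions[1]: True}, sounded_confused=False)
```

In a real deployment each policy would likely be its own agent or service, with a coordinator deciding which one runs next.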

Another Way to Think About It

A simple analogy helps.

An AI agent is like a skilled employee doing a specific job.

Agentic AI is like a team lead coordinating work to deliver a result.

You don’t need a team lead for every task. But once complexity increases, coordination becomes more important than execution speed.

When AI Agents Are the Right Choice

It’s important to say this clearly: not everything needs agentic AI.

AI agents are the right solution when tasks are well defined, environments are stable, and consistency matters more than judgment. Many support bots, automations, and assistants fall into this category, and that’s perfectly fine. Starting with agentic AI too early can actually slow teams down.

When Agentic AI Becomes Necessary

Agentic AI makes sense when problems involve multiple steps, changing context, human handoffs, or real-world consequences.

Healthcare, enterprise operations, logistics, and complex customer journeys fall squarely into this category. These are not “do one thing well” problems. They’re “do the right thing at the right time” problems.

That’s where task-level automation starts to break down.

How Cubet Approaches This with Whizz

This distinction is exactly why we built Whizz at Cubet.

Whizz is not a single AI agent. It’s an agentic AI framework designed to coordinate multiple agents safely and predictably within real systems.

Using Whizz, we help organisations move beyond isolated automations to outcome-driven workflows. The framework handles orchestration, memory, escalation, and integration, while keeping humans firmly in control.

In healthcare, this means Whizz can manage patient follow-ups end to end: triggering conversations, adapting in real time, escalating only when needed, and documenting everything automatically. Clinicians stay focused on care, while the system ensures continuity. The same principles apply across enterprise use cases, wherever workflows are connected and outcomes matter more than individual actions.

Closing Thought

AI agents are powerful tools. Agentic AI is a powerful way of thinking about systems. Most AI initiatives don’t fail because models are weak. They fail because task-level tools are forced to solve system-level problems.

The real question isn’t whether agentic AI is “better.” It’s whether the problem you’re solving requires coordination, adaptation, and ownership.

Curious how agentic systems work in real environments? Connect with us to learn how Cubet uses Whizz to turn intent into outcomes.
